
ADCAIJ: Advances in Distributed Computing and Artificial Intelligence Journal

Regular Issue, Vol. 11 N. 3 (2022), 367-394

eISSN: 2255-2863

DOI: https://doi.org/10.14201/adcaij.28821

IoT-Based Vision Techniques in Autonomous Driving: A Review

Mohammed Qader Kheder and Aree Ali Mohammed

Department of Computer, College of Science, University of Sulaimani, Iraq

✉ mohammed.kheder@univsul.edu.iq, aree.ali@univsul.edu.iq

ABSTRACT

As more people drive vehicles, there is a corresponding increase in the number of deaths and injuries that happen due to road traffic accidents. Thus, various solutions have been proposed to reduce the impact of accidents. One of the most popular solutions is autonomous driving, which involves a series of embedded systems. These embedded systems assist drivers by providing crucial information on the traffic environment or by acting to protect the vehicle occupants in particular situations or to aid driving. Autonomous driving has the capacity to improve transportation services dramatically. Given the successful use of visual technologies and the implementation of driver assistance systems in recent decades, vehicles are prepared to eliminate accidents, congestion, collisions, and pollution. In addition, the IoT is a state-of-the-art invention that will usher in the new age of the Internet by allowing different physical objects to connect without the need for human interaction. The accuracy with which the vehicle's environment is detected from static images or videos, as well as the IoT connections and data management, is critical to the success of autonomous driving. The main aim of this review article is to encapsulate the latest advances in vision strategies and IoT technologies for autonomous driving by analysing numerous publications from well-known databases.

KEYWORDS

autonomous driving; AVs; AVs’ sensors; computer vision; Internet of Things (IoT); Internet of Vehicles (IoV); traffic; accident prevention

1. Introduction

Traffic collisions are among the most serious public health problems. The number of deaths and injuries on the road generally increases as the number of vehicles on the road increases. Every year, about 1.3 million people die as a result of road traffic accidents, with another 20–50 million being disabled or injured (WHO, 2020). The automotive industry has actively pursued new technology to increase passenger safety since its earliest days (Varma et al., 2019). In the early stages, simple mechanical systems, such as turn signals or seat belts, gave way to more advanced ones, such as airbags or pop-up hoods. Later, as electronics progressed, many new developments emerged, for instance, electronic stability control and vehicle brake warning, which provided increased protection through signal processing (Zhao et al., 2021; Cheng et al., 2019). Moreover, systems that offer further passenger protection, such as driver assistance systems based on computer vision techniques, have arisen in the last decade, and several companies have implemented them in their vehicles (Agarwal et al., 2020; Sakhare et al., 2020; Varma et al., 2019).

During the last decade, applications built on mobile devices, sensors, actuators, and other devices, have become smarter, allowing for system connectivity and the implementation of more complex tasks. According to (Sharma, 2021; Litman, 2020; Celesti et al., 2018), the number of connected devices exceeded the global population a few years back, and the number has continued to rise exponentially until now. Wireless sensors, embedded systems, mobile phones, and nearly all other electronic items are now connected to a local network or the Internet, bringing with them the IoT era (Whitmore et al., 2015). The IoT has been around for a few decades and has gained popularity with the advancement of modern wireless technologies (Wang et al., 2021; Lu et al., 2019). Despite there being several different definitions of the IoT, in essence, IoT enables the virtual world to cross the boundaries of the physical world. In addition, the IoT can be described as a global network that connects uniquely specified virtual and physical things, smart objects, and devices, allowing them to communicate with one another (Wang et al., 2021; Fernando et al., 2020; Litman, 2020; Kang et al., 2018). What is more, the IoT paradigm is known as «any-place, any-time, any-one» connected (Litman, 2020). As reported by (Celesti et al., 2018), our daily environment, which includes vehicles, homes, streets, roads, or workplaces, may be connected to smart sensors, actuators, embedded systems, which can communicate with one another through a gateway device.

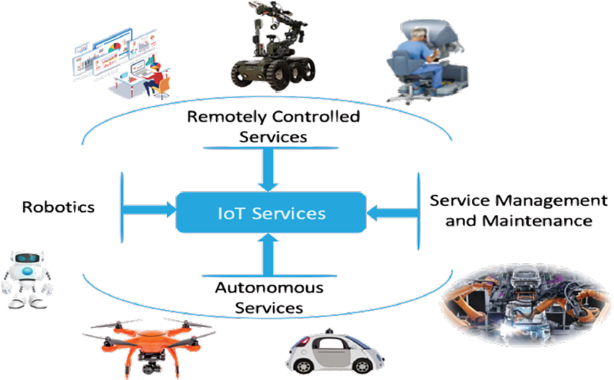

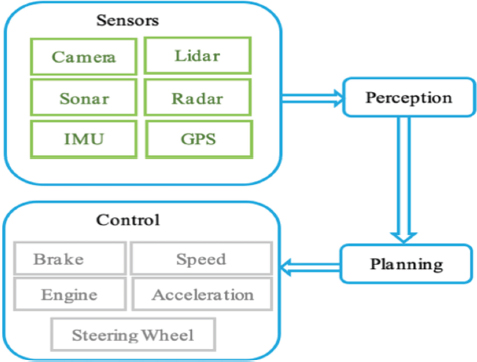

Nowadays, the IoT is a state-of-the-art technology that can be applied to a wide range of uses, including smart cities and smart transportation, along with the issues associated with their deployment (Da Xu et al., 2014; Singh et al., 2014). A few examples of IoT services are shown in Figure 1, covering robotics, intelligent transportation systems, smart cities, and healthcare. IoT is expected to connect billions of new physical devices to the Internet (Sharma, 2021; Wang et al., 2021; Minovski et al., 2020) and, therefore, to give rise to services with a range of special properties and quality requirements. When all of the smart items connected to the Internet are automobiles, the IoT becomes the Internet of Vehicles (IoV). As a result, the IoV is an expanded application of the IoT for intelligent transportation. It is envisioned to be a key data sensing and processing platform for intelligent transportation systems (Kang et al., 2018).

Figure 1. IoT services (Minovski et al., 2020)

Vehicles that interact with each other, as well as portable devices carried by roadside units, pedestrians, and the public network, use vehicle-to-vehicle (V2V), vehicle-to-human (V2H), vehicle-to-road (V2R), vehicle-to-device (V2D), vehicle-to-pedestrian (V2P), vehicle-to-infrastructure (V2I), vehicle-to-grid (V2G), and vehicle-to-sensor (V2S) interconnectivity to create a network in which the members are smart objects rather than humans (Fernando et al., 2020; Sadiku et al., 2018). The IoV environment is made up of the wireless network environment and road conditions. A vehicle might be a smart sensor platform that collects data from other vehicles, drivers, pedestrians, and the environment and uses it for safe navigation, traffic management, and pollution control (Sakhare et al., 2020). As can be seen, people use vehicles daily. The most serious concern regarding their expanding use is the increasing number of deaths that occur as a result of traffic accidents. The associated costs and risks are acknowledged as important issues confronting modern civilization (Celesti et al., 2018). For several years, researchers have been looking into Vehicular Ad-hoc Network (VANET), which evolved from Mobile Ad-hoc Network (MANET) (Sharma, 2021; Sadiku et al., 2018). Furthermore, because of people’s lifestyle changes, a variety of VANET criteria are proposed. Many real-life scenarios need vehicle networking technology, such as driving on the highway. Drivers want to understand the traffic status on the roads ahead of them and modify their driving path if an accident or traffic congestion happens. There are many different ways that IoT technology and vision techniques are being used in the field of autonomous vehicles, and this review article looks at how these technologies and techniques are used in this field.

The rest of the paper is organized as follows. In Section 2, several theoretical concepts are discussed, including the term «IoT» and its structure, autonomous vehicle technology and various smart sensors, the use of the IoT for autonomous vehicles, and an explanation of how different vision techniques could be used to reduce road traffic accidents. Section 3 highlights some of the IoV challenges that may arise during the design and implementation of the IoV, as well as nine popular existing road vehicle systems that use IoT and computer vision. Section 4 assesses a number of recent studies on the use of IoT technologies and vision methods in various autonomous vehicle applications, compares the results of different autonomous systems employing various vision methods and algorithms, and discusses which methods should be used to achieve the best results. Finally, Section 5 presents the conclusions and recommendations.

2. Theoretical Concepts

2.1. The Internet of Things and Its Structure

In 1999, Kevin Ashton proposed the concept of the «Internet of Things» (Hamid et al., 2020). The meaning of «things» has expanded over the last decade as technology has advanced. However, the key purpose remains the same: a digital computer can make sense of information without human interaction (Ravikumar and Kavitha, 2021; Fernando et al., 2020). In addition, according to (Singh et al., 2014), smart sensors, actuators, and an embedded system with a microprocessor are typical components of «things». They have to communicate with one another, building the need for M2M (Machine-to-Machine) communication (Wang et al., 2021; Ahmed and Pothuganti, 2020; Hamid et al., 2020). As reviewed by (Whitmore et al., 2015), the concept of the IoT is developing from M2M connectivity.

According to (Ravikumar and Kavitha, 2021; Fernando et al., 2020; Cheng et al., 2019), wireless technologies such as Bluetooth, Wi-Fi, and ZigBee can be used for short-range connectivity, whereas wide-area and cellular technologies such as LoRa (Long Range), WiMAX (Worldwide Interoperability for Microwave Access), GSM (Global System for Mobile Communications), NB-IoT (Narrowband Internet of Things), Sigfox, CAT-M1 (Category M1), GPRS (General Packet Radio Service), 3G, 4G, LTE (Long Term Evolution), and 5G are suitable for long-range communication. DeviceHive, Kaa, Thingspeak, Mainflux, and Thingsboard are IoT platforms that enable M2M communication via AMQP (Advanced Message Queuing Protocol), MQTT (MQ Telemetry Transport), STOMP (Simple Text Oriented Messaging Protocol), XMPP (Extensible Messaging and Presence Protocol), and HTTP (HyperText Transfer Protocol) (Cheng et al., 2019). These platforms provide node management, monitoring capabilities, data storage and analysis, and other features. Furthermore, based on their characteristics, these protocols may be employed for a variety of IoT applications. However, (Fernando et al., 2020; Cheng et al., 2019; Hanan, 2019) claim that the MQTT protocol is the most popular for IoT devices because of its features, which include the ability to gather data from a variety of electronic devices and provide remote device monitoring. It is a publish/subscribe protocol that runs over TCP (Transmission Control Protocol), which makes it well suited to lightweight M2M communication with minimal data loss. Furthermore, because subscribers receive updates as soon as they are published, clients do not need to request them repeatedly, which reduces network traffic and processing effort.
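The publish/subscribe pattern described above can be illustrated with a minimal sketch. It is a toy, in-process broker, not an MQTT client or any real library: the names `ToyBroker` and `topic_matches` and the `vehicle/...` topics are assumptions made for illustration. The matching follows the MQTT wildcard convention (`+` for one topic level, `#` for all remaining levels) and ignores details such as `$`-prefixed system topics, QoS levels, and network transport.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple


def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if `topic` matches an MQTT-style topic filter.

    '+' matches exactly one level; '#' matches the parent level and all
    remaining levels, and is only valid as the last element of the filter.
    """
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            return i == len(filter_levels) - 1
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    return len(filter_levels) == len(topic_levels)


class ToyBroker:
    """Hypothetical in-memory broker: publishers and subscribers never meet."""

    def __init__(self) -> None:
        self._subs: Dict[str, List[Callable[[str, str], None]]] = defaultdict(list)

    def subscribe(self, topic_filter: str, callback: Callable[[str, str], None]) -> None:
        self._subs[topic_filter].append(callback)

    def publish(self, topic: str, payload: str) -> int:
        """Push the message to every matching subscriber; return the delivery count."""
        delivered = 0
        for topic_filter, callbacks in self._subs.items():
            if topic_matches(topic_filter, topic):
                for callback in callbacks:
                    callback(topic, payload)
                    delivered += 1
        return delivered


received: List[Tuple[str, str]] = []
broker = ToyBroker()
# The subscriber registers once and is then pushed updates; it never polls.
broker.subscribe("vehicle/+/speed", lambda t, p: received.append((t, p)))
speed_deliveries = broker.publish("vehicle/car42/speed", "63.5")
fuel_deliveries = broker.publish("vehicle/car42/fuel", "0.4")
```

Because the subscriber is only called when a matching message is published, no request traffic is generated for topics it does not care about, which is the traffic reduction the paragraph above refers to.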

Moreover, although data processing usually occurs in the cloud computing infrastructure, it may sometimes be critical, depending on the application, that some data processing take place in the IoT devices rather than in a centralized node. Hence, as the processing partially moves to the end network elements, a modern computing model is introduced, which is called edge computing (Hanan, 2019). However, these devices are mostly low-end devices and are therefore not suitable for handling intense processing tasks. As a consequence, a fog node is required in the middle (Rani et al., 2021; Hanan, 2019). As stated by (Rani et al., 2021; Hanan, 2019; Kang et al., 2018), fog nodes have many characteristics, including sufficient resources, the ability to perform advanced computing tasks, the ability to be physically placed near end network components, and the ability to mitigate the overload generated by massive data transfer to any central cloud node. Furthermore, they assist IoT devices with big data handling by providing computing, storage, and networking services. Finally, the data is stored on a cloud server, where it is available for deep processing using a range of machine learning algorithms, as well as collaboration with other devices. Nowadays, many IoT applications are available for use. Table 1 lists several common IoT applications:

Table 1. Common IoT applications

| No. | Application’s Name | Details |
| --- | --- | --- |
| 1 | Smart Transportation | Smart physical sensors and actuators can be integrated into vehicles, as well as mobile applications and devices installed in the area. It is possible to have customized route recommendations (Elbery et al., 2020), economic street lighting (Sastry et al., 2020), quick parking reservations (Ahmed and Pothuganti, 2020), accident avoidance (Choubey and Verma, 2020; Celesti et al., 2018), automated driving (Rahul et al., 2020), and other services. |
| 2 | Smart Homes | Contains conventional devices such as washing machines, refrigerators, light bulbs, a smart door that opens and locks, and other appliances that have been built and can connect with licensed users through the Internet, allowing for improved system control and maintenance as well as energy usage optimization (Zaidan and Zaidan, 2020). |
| 3 | Security and Surveillance | Often, companies have monitoring and surveillance systems, and they have smart cameras that can collect video feedback from the road or a particular location. Smart detection technologies could be able to find criminals or avoid dangerous situations by recognizing objects in real time (Sultana and Wahid, 2019). |
| 4 | Health-Care Assistance | Smart devices for health-care assistance are being built to improve a patient's well-being. Plasters with remote sensors also track the status of a wound and report the data to experts without requiring physical examination (Philip et al., 2021; Banerjee et al., 2020). According to (Philip et al., 2021), sensors such as wearable devices or small inserts would be able to detect and monitor a wide range of parameters, including blood oxygen and sugar levels, heart rate, or temperature. |
| 5 | Environmental Conditions Monitoring | A network of remote sensors spread across the city provides the ideal infrastructure for monitoring a wide range of environmental conditions. Several weather sensors, such as barometers, ultrasonic wind, and humidity sensors, can assist in the construction of advanced weather stations (Lex et al., 2019). Several smart sensors are also able to track the city's air quality and water pollution levels (Srivastava and Kumar, 2021; Lex et al., 2019). |
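The division of work between edge devices, fog nodes, and the cloud described before Table 1 can be sketched as a three-stage pipeline. This is only an illustration under stated assumptions: the function names, the device identifiers, the plausibility range, and the use of a Python list as "cloud storage" are all hypothetical.

```python
from statistics import mean
from typing import Dict, List


def edge_filter(samples: List[float], low: float, high: float) -> List[float]:
    """Edge device: a cheap range check that drops implausible readings."""
    return [s for s in samples if low <= s <= high]


def fog_aggregate(per_device: Dict[str, List[float]]) -> Dict[str, float]:
    """Fog node: reduce each device's samples to one mean value,
    so that far less data has to travel on to the cloud."""
    return {dev: mean(vals) for dev, vals in per_device.items() if vals}


# Stand-in for a cloud store where summaries await deeper analysis.
cloud_store: List[Dict[str, float]] = []

# Hypothetical temperature readings; -999.0 represents a sensor glitch.
raw = {"temp-1": [21.0, 21.4, -999.0], "temp-2": [19.8, 20.2]}
filtered = {dev: edge_filter(vals, -40.0, 85.0) for dev, vals in raw.items()}
summary = fog_aggregate(filtered)
cloud_store.append(summary)
```

Five raw readings leave the devices, but only two summary values reach the cloud, which is the overload mitigation attributed to fog nodes above.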

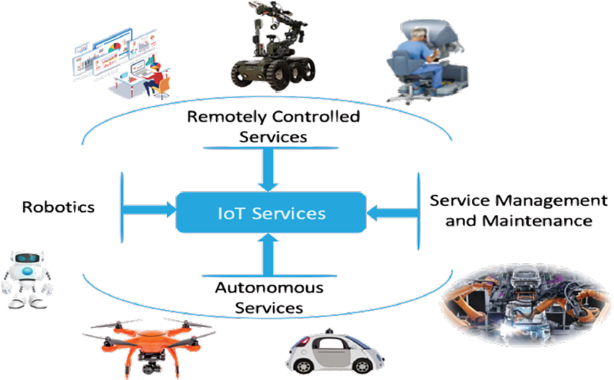

The IoT can be thought of as a vast network made up of subnetworks of connected devices and computers interconnected by some intermediaries, with various technologies such as Radio Frequency Identification (RFID), barcodes, and wired and wireless connections acting as network enablers (Sharma, 2021; Da Xu et al., 2014). The International Telecommunication Union (ITU) defines four dimensions of things as views of the IoT framework, as shown in Figure 2.

Figure 2. The four IoT dimensions (ITU)

• Tagging Things: RFID is at the forefront of the IoT vision because of its real-time thing traceability and addressability. RFID serves as an automated barcode that identifies any item it is attached to (Whitmore et al., 2015; Da Xu et al., 2014). There are two varieties of RFID: active RFID, which has a battery on board and is often used in healthcare, retail, and facilities management, and passive RFID, which has no battery, is powered by the reader, and is widely used in road toll tags and bank cards (Elbasani et al., 2020; Da Xu et al., 2014; Singh et al., 2014).

• Feeling Things: smart sensors and actuators serve as the key instruments for collecting data from the environment. Communications are built to connect the physical and information worlds, so that data may be obtained (Hirz and Walzel, 2018). Recent technical advances have resulted in high performance, low power consumption, and low cost.

• Shrinking Things: nanotechnology and miniaturization have enabled ever smaller things to communicate as things or smart devices. The key benefit is an increase in quality of life, such as the use of nano-sensors to track water quality at lower cost (Shashank et al., 2020), as well as in healthcare (Li et al., 2021; Whitmore et al., 2015), for example, the use of nano-sensors in cancer detection and treatment.

• Thinking Things: intelligence is embedded in devices via smart sensors and a network connection to the Internet. Household electrical appliances can be developed to achieve intelligent control, such as refrigerators that can detect the quantity of different items and the freshness of perishable items. They may also communicate with users by sending them warnings over the Internet.

2.2. Autonomous Vehicles and Sensors

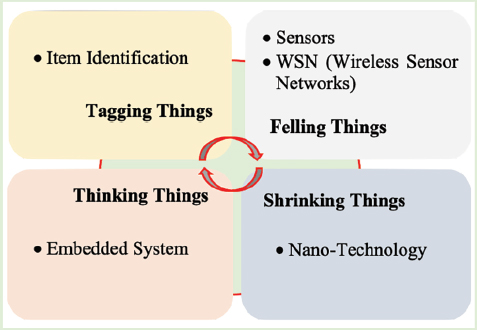

Autonomous Vehicles (AVs): An AV is a vehicle that can operate without human intervention (Hamid et al., 2020; Litman, 2020), that is, any vehicle with features that allow it to accelerate, brake, and steer on its own. According to (Ravikumar and Kavitha, 2021; Hamid et al., 2020; Litman, 2020), the concept of AVs dates back to the 1930s, when science fiction envisioned AVs as a new challenge for the automotive industry. Several leading automotive manufacturers around the world, including Audi, Ford, Volvo, Nissan, and BMW, have started to build autonomous vehicles (Ahmed and Pothuganti, 2020; Lu et al., 2019). Figure 3 depicts the evolution of AVs from 1998 to 2018.

Figure 3. The development of AVs from 1998 to 2018 (Lu et al., 2019)

The AVs use various technologies and sensors, including adaptive cruise control, anti-lock brakes (brake by wire), active steering (steer by wire), lasers, radar, and GPS navigation technology (Ravikumar and Kavitha, 2021; Hamid et al., 2020; Litman, 2020; Lu et al., 2019). As a result, AVs have been targeted for their ability to reduce pollution and dust particles, prevent accidents, improve vehicle safety, reduce fuel consumption, create new potential market opportunities, and free up driver time (Ravikumar and Kavitha, 2021; Ahmed and Pothuganti, 2020). Figure 4 represents the AV system’s block diagram, which is divided into four categories (Kocić et al., 2018).

Figure 4. The block diagram of the AV’s system (Kocić et al., 2018)

According to the estimations of (Ahmed and Pothuganti, 2020; Lu et al., 2019), nearly 10 million autonomous vehicles will be on the road in the next few years. (Ahmed and Pothuganti, 2020; Litman, 2020; Lu et al., 2019) divide AVs into two categories, semi-autonomous and fully autonomous, as described below:

1. Semi-Autonomous: can accelerate, steer, and brake, keeping its distance from the car in front and maintaining its lane and speed. However, the driver is still needed and remains in full control.

2. Fully-Autonomous: capable of driving from a starting point to a fixed destination without the driver’s intervention. This form of AV is expected to debut in the coming years.

AVs can have several benefits; however, they may also entail many costs: internal (affecting the driver) and external (affecting other people). As a result, when preparing for autonomous vehicles, all of these factors should be taken into account (Ravikumar and Kavitha, 2021; Litman, 2020). The table below shows the possible benefits and drawbacks of autonomous vehicles, which should be carefully thought about before they are used in a project.

Table 2. AVs benefits and drawbacks (Litman, 2020)

| | Benefits | Drawbacks |
| --- | --- | --- |
| Internal | The stress level of drivers has decreased, and their productivity has increased. | Vehicle prices have gone up because more equipment, services, and taxes have been added to vehicles. |
| | More independent mobility for non-drivers can minimize chauffeuring responsibilities and transit subsidy demands for motorists. | More accidents are caused by increased user hazards, including system failures, platooning, higher traffic speeds, increased risk-taking, and increased overall vehicle travel. |
| | Reduced paid driver costs- this lowers the cost of taxis and commercial transportation drivers. | Features such as location tracking and sharing data may make it hard to keep your data safe and private. |
| External | Increased safety may reduce the likelihood of a collision and insurance costs. | Increased infrastructure costs may necessitate stricter standards for roadway construction and maintenance. |
| | Increased road capacity and cost savings from more efficient traffic could cut down on congestion and road costs. | Additional dangers- situations that may endanger other road users and be used for illegal purposes. |
| | Parking costs are reduced, which means less demand for parking at locations. | Congestion, pollution, and sprawl-related expenses may all rise as a result of better automobile travel. |
| | Reduced energy usage and pollutants might help to improve fuel economy and reduce pollution. | Concerns about social equality- walking, biking, and public transportation would continue to be the cheapest ways to get around. |

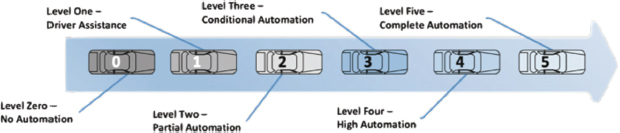

According to the SAE (Society of Automotive Engineers) organization, vehicles are classified into six levels in terms of autonomous vehicles, as indicated in Figure 5, starting at level zero and ending at level five (Hamid et al., 2020).

Figure 5. The journey of automation to fully AVs (Hamid et al., 2020)

At Level 0 (no automation), people perform all driving operations, such as accelerating or slowing down, steering, braking, and so forth. Level 1 (driver assistance): the system assists with either acceleration and deceleration or steering, based on knowledge of driving circumstances. Level 2 (partial automation): automatic acceleration and deceleration are combined with automatic steering. Level 3 (conditional automation): a driving mode in which the automated driving system performs the driving task, on the expectation that the driver will respond appropriately to a request to intervene. Level 4 (high automation): in some driving modes, vehicle automation can perform all driving tasks even if a person does not respond to a request to intervene. Level 5 (full automation): the automation system of the vehicle is capable of performing all driving activities in any circumstances (Hamid et al., 2020). By using many different devices, smart sensors, and actuators to run and manage a driverless car, AVs at Levels 4 and 5 can do all of the things that people do when they drive.
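The six levels can be captured in a small data structure. The sketch below is only illustrative: the names `SAELevel` and `human_fallback_expected` are assumptions, and the rule it encodes (a human is still expected to drive or to take over on request up to and including Level 3) is a simplified reading of the level descriptions above.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """Driving automation levels as listed in the text (0 to 5)."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def human_fallback_expected(level: SAELevel) -> bool:
    """True when a human must drive or be ready to take over on request.

    Simplified assumption: from Level 4 upwards, the vehicle copes
    even if nobody responds to a takeover request.
    """
    return level <= SAELevel.CONDITIONAL_AUTOMATION


# Levels at which the text describes the car as driverless.
driverless_levels = [lvl for lvl in SAELevel if not human_fallback_expected(lvl)]
```

Using an `IntEnum` keeps the levels ordered, so threshold checks such as the one in `human_fallback_expected` read naturally.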

Sensors: As claimed by (Hamid et al., 2020; Hirz and Walzel, 2018; Da Xu et al., 2014), sensors are the most important components of AVs. AVs would be impossible without sensors, which allow the vehicle to see and feel everything on the road while also gathering the data necessary to drive safely. Many smart sensors and actuators can be part of an autonomous vehicle, including video cameras, lidar, radar, sonar, a global positioning system (GPS), an inertial measurement unit (IMU), and wheel odometry (Kocić et al., 2018). Sensors gather data, which is subsequently analyzed by the AV’s computer and used to control the vehicle’s steering, speed, and braking (Hamid et al., 2020; Kocić et al., 2018). Moreover, data collected by sensors in AVs, such as traffic jams, the actual path ahead, and any road obstacles, can be exchanged among vehicles linked by M2M technology, a process known as V2V (vehicle-to-vehicle) communication (Singh et al., 2014). Table 3 covers several AV sensors and provides references to the reviewed articles:

Table 3. Common sensors in autonomous vehicles

| No. | Article(s) | Sensor | Sensor Details |
| --- | --- | --- | --- |
| 1 | (Cao et al., 2019; Koresh and Deva, 2019; Minhas et al., 2019) | Camera | Detects obstacles in real time and supports lane departure warning and road sign recognition. The captured image contains a large number of individual pixel values; however, these raw values are of little use on their own. Therefore, computer vision algorithms should be used to convert low-level image information into high-level image information. |
| 2 | | Ultrasonic | It is typically used to detect obstacles over small distances, for example, to assist drivers with parallel parking or using auto-parking devices. It emits a high-frequency sound wave and uses the reflected echo to measure the distance to an object. Ultrasonic technology sends out sound waves at a frequency of 50 kHz and waits for a response. |
| 3 | | Radio Detection and Ranging (RADAR) | RADAR emits radio waves that detect depth over short and long distances. RADAR units are dotted around the vehicle to monitor the position of nearby vehicles. By calculating the difference in frequency of returned signals generated by the Doppler effect, this sensor can detect the distance and speed of moving objects. In addition, it is built into vehicles for a number of different reasons, such as adaptive cruise control, blind-spot detection, and collision avoidance or warning. |
| 4 | | Light Detection and Ranging (Lidar) | Lidar determines the distance between the sensor and the nearest object using an infrared laser beam. It uses active pulses of light to sense the vehicle's surroundings, detect route boundaries, and distinguish lane markers. Most modern lidar sensors use light with a wavelength of 900 nm, while some lidars use longer wavelengths that perform better in rain and fog. |
| 5 | | Dedicated Short-Range Communications (DSRC) | DSRC is a collection of standards and protocols for one-way or two-way short-range to medium-range wireless communication networks specifically intended for automotive vehicle use. It can be used alongside 4G, Wi-Fi, Bluetooth, and others for V2V, V2I (Vehicle-to-Infrastructure), and V2X (Vehicle-to-Everything) communication. |
| 6 | (Choubey and Verma, 2020; Elbery et al., 2020; Celesti et al., 2018) | Global Positioning System (GPS) | GPS receivers allow autonomous vehicles to maneuver without the need for human input. AVs can automatically sense, store, and retrieve data about their surroundings while other technologies such as laser light, radar, and computer vision are integrated. However, more sophisticated techniques are required for autonomous vehicles to execute certain activities that people can perform, such as parking (Ahmed and Pothuganti, 2020), recognizing road signs (Choubey and Verma, 2020), and detecting diverse vehicles (Xiang et al., 2018) on the road. |
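Two of the measurement principles in Table 3 reduce to short formulas: an ultrasonic sensor converts the echo's round-trip time into distance, and a radar converts the Doppler frequency shift into radial speed. The sketch below shows both under stated assumptions: the speed of sound is taken for dry air at roughly 20 °C, the 77 GHz carrier in the example is a typical automotive radar band rather than a value from the reviewed articles, and the function names are hypothetical.

```python
SPEED_OF_SOUND_M_S = 343.0          # assumed: dry air at about 20 degrees Celsius
SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact, by definition of the metre


def ultrasonic_distance_m(echo_round_trip_s: float) -> float:
    """Distance to the obstacle from the echo's round-trip time.

    The pulse travels to the obstacle and back, hence the division by two.
    """
    return SPEED_OF_SOUND_M_S * echo_round_trip_s / 2.0


def radar_radial_speed_m_s(doppler_shift_hz: float, carrier_hz: float) -> float:
    """Radial speed of a target from the Doppler shift of the returned signal.

    Uses v = f_d * c / (2 * f_0), valid for speeds far below the speed of light.
    """
    return doppler_shift_hz * SPEED_OF_LIGHT_M_S / (2.0 * carrier_hz)


# Example: an echo received after 10 ms, and a 5 kHz shift on a 77 GHz carrier.
parking_gap_m = ultrasonic_distance_m(0.010)
closing_speed_m_s = radar_radial_speed_m_s(5_000.0, 77e9)
```

With these inputs the obstacle is a little under two metres away and the target is closing at just under 10 m/s; both results depend directly on the assumed constants.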

2.3. IoT in Autonomous Driving

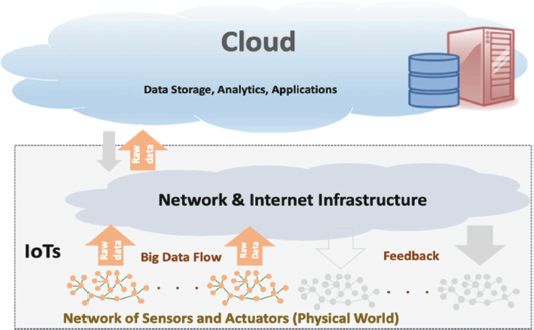

The term «automotive IoT» refers to embedding IoT technology into automotive systems to create modern applications that make the vehicle more intelligent, more powerful, and safer to drive (Celesti et al., 2018; Sadiku et al., 2018). The concept of IoT in the automotive industry can be summarized in three main divisions: connectivity from one vehicle to another (V2V), connectivity between vehicles and external infrastructure (V2I), and connectivity with external hardware and devices (V2D). Taking this concept further, a connected vehicle is a vehicle that includes a platform which allows for the exchange of information between the vehicle and its surroundings using local wireless, the Internet, or smart sensors (Minovski et al., 2020). Wang et al. (2021) state that a typical IoT platform is an integrated system capable of supporting hundreds of millions of simultaneous device communications, generating large amounts of data to be transferred and processed in cloud computing. In addition, according to (Fernando et al., 2020; Hamid et al., 2020; Rahul et al., 2020), a typical IoT platform contains four main components, as shown in Figure 6, all of which are concerned with the IoT autonomous vehicle platform: (1) smart sensors and hardware devices, which collect various data types from the real world; (2) a communication network component, which is typically built on wireless technology, namely cellular technologies (3G, 4G, and 5G) or Wi-Fi; (3) a big data component, which represents the volume of data being generated and which must be transmitted, stored, and processed; and (4) the cloud component, where the data is stored and processed because it provides processing, analytics, and storage services. As reviewed by Da Xu et al. (2014), traditional IoT applications are hosted in the cloud and can provide input and make choices for physical systems. In IoT AVs, on the other hand, the cloud will serve as the centralized administration system for all software components and monitoring tools (Hamid et al., 2020; Celesti et al., 2018).

Figure 6. Typical components of the IoT platform (Hamid et al., 2020)

IoT for AVs is transforming the transportation system into a global network of vehicular networks. Smart vehicle monitoring, dynamic information systems, and software to minimize insurance costs, road congestion, and potential collisions are just a few of the advantages of the IoT. There are two different data-gathering modules in the IoT AV platform. First, the vehicle collects data from its own sensors and shares it with other vehicles in the neighbourhood. Second, the IoT platform serves as a repository for massive amounts of data received from various gateways (e.g., traffic information, environmental information, parking, and transportation) and connected devices (e.g., rail, traffic lights, a car, working areas, parking spots, entertainment, pedestrians, weather conditions, other vehicles, and so on) (Choubey and Verma, 2020).

2.4. Road Traffic Accident Prevention using Vision Techniques

Road traffic accidents, which are one of the leading causes of injuries and deaths in the world, have been exacerbated by the rapid rise in the number of vehicles on the road. As these numbers grow, more and more traffic accidents will occur (Uma and Eswari, 2021; Agarwal et al., 2020). According to (Uma and Eswari, 2021; Agarwal et al., 2020; Choubey and Verma, 2020), the trigger factors are classified into two categories, human and environmental, with the human aspect being responsible for far more accidents. As reported by the National Highway Traffic Safety Administration (NHTSA), 85 percent of all road accidents happened in developing countries. Moreover, (Agarwal et al., 2020; Celesti et al., 2018) discuss that a lack of attention due to driver fatigue or drowsiness was a factor in one out of every three crashes in 2019, while drugs, alcohol, and distraction were factors in one out of every five accidents. As claimed by (Uma and Eswari, 2021; Celesti et al., 2018), the following are the most frequent causes of traffic accidents:

• Frontal crashes occur when two vehicles traveling in opposite directions intersect. Although different types of mechanisms exist that can reduce the number of injuries that a frontal vehicle collision can cause, it may be seen that computer vision strategies have the ability to prevent a potential or immediate automotive collision. Using one or more frontal video cameras, the vehicle would be able to identify a potentially risky situation and respond appropriately, such as with an alert or sound (Uma and Eswari, 2021; Agarwal et al., 2020). Moreover, as claimed by (Hamid et al., 2020; Minovski et al., 2020), a variety of sensors, including lidar and radar, can assist cars in avoiding frontal collisions by monitoring the environment around them.

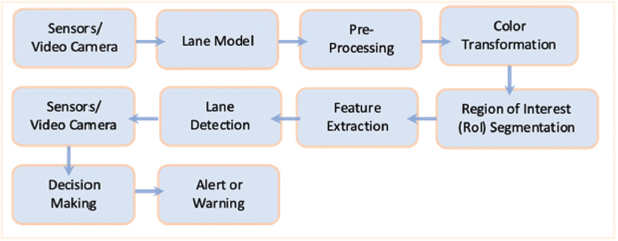

• Lane departure collisions occur when a driver changes lanes, causing the vehicle to crash into another vehicle in the adjacent lane. (Dhawale and Gavankar, 2019; Narote et al., 2018; Zang et al., 2018) state that computer vision techniques greatly reduce the number of injuries caused by lane departure collisions. If the driver is distracted, the system can compensate for risky behaviour, such as an unintended lane change, by drawing the driver’s attention back to the road. Furthermore, when the device is installed in the car, drivers are more careful to avoid crossing the lane marker because of the warnings it issues, such as an audible alert, a vibrating driver seat, a graphical note, and others (Olanrewaju et al., 2019; Zang et al., 2018). Figure 7 shows a block diagram of a lane departure collision.

Figure 7. The block diagram of lane departure collision (Dhawale and Gavankar, 2019; Zang et al., 2018)

• Surrounding vehicles: Nearby vehicles can be dangerous if the driver is not aware of them. A driver’s capacity to identify surrounding vehicles is considerably reduced when he/she is fatigued. This can lead to a collision when driving in a convoy, changing lanes, parking, and in other similar situations (Agarwal et al., 2020). Depending on the complexity of the system that monitors the surrounding vehicles, appropriate action, such as sending an alert, is taken based on the actual vehicle condition and the situation anticipated in the immediate future. According to (Chetouane et al., 2020; Xiang et al., 2018), the main issue with this vision method is the incorrect estimation of close vehicles. Since vehicles come in a variety of colors and sizes, estimates can be inaccurate, leading to unsafe driver behavior that endangers other drivers and road users.

• Road signalization: Road signalization is a key factor in traffic safety. Drivers must be able to detect and identify traffic signals. The number of casualties caused by this traffic problem can be reduced if suitable road signalization detection and a driver assistance device are employed (Ciuntu and Ferdowsi, 2020; Li et al., 2020; Narote et al., 2018). The technology notifies the driver if they make a potentially dangerous movement.
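
The lane departure warning described above can be illustrated with a minimal sketch. It assumes that an upstream vision stage has already located the left and right lane markings in the bottom image row and that the camera sits on the vehicle's centreline; the names `LaneObservation`, `lateral_offset_ratio`, `departure_warning` and the 0.25 threshold are hypothetical choices for illustration, not values taken from the reviewed papers.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LaneObservation:
    """Lane marking positions (in pixels) at the bottom row of a camera frame."""
    left_x: float     # x-coordinate of the left lane marking
    right_x: float    # x-coordinate of the right lane marking
    image_width: int  # frame width; the camera is assumed to be centred on the vehicle


def lateral_offset_ratio(obs: LaneObservation) -> float:
    """Signed offset of the vehicle from the lane centre, as a fraction of lane width.

    Positive values mean the vehicle sits to the right of the lane centre.
    """
    lane_width = obs.right_x - obs.left_x
    if lane_width <= 0:
        raise ValueError("right_x must be greater than left_x")
    lane_centre = (obs.left_x + obs.right_x) / 2
    vehicle_centre = obs.image_width / 2
    return (vehicle_centre - lane_centre) / lane_width


def departure_warning(obs: LaneObservation, threshold: float = 0.25) -> Optional[str]:
    """Return a warning string when the offset exceeds the (assumed) threshold."""
    offset = lateral_offset_ratio(obs)
    if offset > threshold:
        return "drifting right"
    if offset < -threshold:
        return "drifting left"
    return None


if __name__ == "__main__":
    print(departure_warning(LaneObservation(200, 440, 640)))  # centred in the lane
    print(departure_warning(LaneObservation(100, 340, 640)))  # lane centre lies to the left
```

In a real system the returned string would trigger one of the warnings mentioned above, such as an audible alert or a vibrating seat.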

3. Study Conducted

In this section, the study discusses several IoV challenges that should be addressed when doing research on AVs, as well as some popular current AV applications.

3.1. The Internet of Vehicles’ Challenges

Although AVs have become a reality after many years of research and development, many challenges remain in developing fully autonomous systems, including engineering technologies, regulatory difficulties, a lack of industry-standard technology and equipment, and consumer confidence and acceptance (Hamid et al., 2020; Whitmore et al., 2015; Singh et al., 2014). The challenges become more complex as the degree of autonomy increases. Security and privacy, big data, reliability, road conditions or environments, mobility, and open standards are among the problems facing the IoV and delaying its acceptance. These challenges must be addressed for the IoV to become reliable and broadly accepted (Hamid et al., 2020; Sadiku et al., 2018).

• Security and privacy: These are essential because the IoV requires the integration of several different systems, standards, and services (Garg et al., 2020; Whitmore et al., 2015). Because it is an open, public network, the IoV can be a target for cyber-attacks and intrusions, which can lead to physical and personal information being leaked (Whitmore et al., 2015).

• Big data: Due to the huge number of linked vehicles, one of the most difficult challenges of the IoV is the collection and storage of big data (Garg et al., 2020; Sadiku et al., 2018). As stated by (Hamid et al., 2020), four data aspects are explored in AVs: data collection, data handling, data management, and data labelling. Big data analytics and mobile cloud computing would be crucial for handling this data.

• Reliability: Vehicles, smart sensors and actuators, and network hardware all have the potential to fail. The system should be able to handle erroneous data as well as unreliable communications, such as those caused by DoS attacks. In general, vehicle protection takes precedence over entertainment (Choubey and Verma, 2020; Sadiku et al., 2018).

• Road condition: The state of the roads varies greatly from one location to the next (Ng et al., 2019; Narote et al., 2018). For example, some areas have smooth and well-marked large highways, while others have deteriorated road conditions with no lane marking (Hamid et al., 2020). In such areas, lanes are not well marked, there are potholes, and mountainous and tunnel roads lack well-defined external path signals (Dhawale and Gavankar, 2019).

• Mobility: When vehicles move quickly and the network topology changes often, it can be difficult to keep nodes connected and provide them with the services they need to send and receive data in real-time (Sadiku et al., 2018).

• Open standards: Interoperability and standardization are needed to drive adoption. According to (Garg et al., 2020; Sadiku et al., 2018), a lack of standards makes successful V2V collaboration impossible, whereas the implementation of open standards facilitates the sharing of information. In addition, governments should work with businesses to implement technical best practices and open international standards, and should encourage businesses to work together.

There are also many recent AV problems, such as weather conditions, traffic conditions, accident liability, radar interference, vehicular communication, and others, all of which affect the AV's efficiency.

3.2. Existing Road Vehicle Systems

Today, many initiatives use computer vision methods and IoT in road vehicles. The major purpose of these initiatives is to build driver state recognition and road infrastructure recognition modules for autonomous vehicles (Sharma, 2021; Ahmed and Pothuganti, 2020; Litman, 2020). According to (Litman, 2020; Lex et al., 2019), using IoT applications in the automotive industry aids design by increasing performance, lowering costs, and allowing for quality control. Table 4 lists existing systems that have been evaluated in a variety of publications; the more recent articles have been selected.

Table 4. Existing autonomous vehicles’ systems

| No. | Paper(s) | Proposed System’s Name | Proposed System’s Details |
|---|---|---|---|
| 1 | | Accident Detection/Prevention | The main aim of this AV system is to detect accident-prone areas to minimize the number of accidents and traffic congestion. It can also notify drivers of crucial situations, allowing them to take action quickly. |
| 2 | (Ciuntu and Ferdowsi, 2020; Li et al., 2020; Zhang et al., 2020; Cao et al., 2019; Koresh and Deva, 2019) | Real-time Traffic Sign Detection | Traffic-sign recognition aims to identify the many types of traffic signs placed on the road, such as speed limits, turn ahead, school, children, and others that help drivers to drive safely. To detect and recognize distinct traffic signs, this type of system uses image processing algorithms. Furthermore, traffic sign detection may be achieved in two stages: color segmentation and classification. |
| 3 | (Liu et al., 2020; Savaş and Becerikli, 2020; Minhas et al., 2019; Eddie et al., 2018; Izquierdo et al., 2018; Kumar and Patra, 2018) | Driver Fatigue Detection | This system is used to detect oncoming driver fatigue; issuing a timely warning can help avoid many accidents and consequently reduce personal suffering. According to international statistics, driver fatigue causes a large number of road accidents; therefore, it plays a big role in AVs. |
| 4 | (Ahmed and Pothuganti, 2020; Chetouane et al., 2020; Wei et al., 2019; Hsu et al., 2018; Tsai et al., 2018; Xiang et al., 2018) | Vehicle Detection | A variety of autonomous sensors may be used to identify nearby vehicles, allowing drivers to be aware of what is going on around them. The optical camera is used in many studies, although it is challenged by variation in vehicle shape, size, color, and environmental appearance. Knowledge-based, stereo-vision, and motion-based vision approaches are all employed to identify vehicles. |
| 5 | (Khalifa et al., 2020; Kim et al., 2020; Pranav and Manikandan, 2020; Preethaa and Sabari, 2020; Zahid et al., 2019; Qu et al., 2018) | Pedestrian Detection | Detecting people in images has a long history, and pedestrian identification has advanced significantly in the previous decade. To ensure safe behavior in certain environments, autonomous vehicles must be able to detect and avoid pedestrians. However, automatically identifying human forms using a visual system is an extremely complex process. Accurate pedestrian detection might have an immediate effect on vehicle safety or other applications. |
| 6 | | Lane Detection and Departure Warning | Every autonomous vehicle requires lane recognition and departure warning, which is very beneficial for driver assistance. It is a very difficult problem because of the difficulties this type of system faces, such as limited visibility of the lane line, vagueness of lane patterns, shadows, brightness, light reflection, and others. Lane detection proceeds through image enhancement and edge detection, extraction of lane characteristics, and calculation of the road form from the processed image. |
| 7 | | Road Anomalies Detection | Anomalies on the road cause discomfort to drivers and riders, and they can lead to mechanical failure or even injuries. Since road conditions have an immediate effect on many facets of transportation, road anomaly detection plays an important role in smart transportation. The main goal of this system is to identify bumps and potholes on the road and warn drivers, thus reducing vehicle injuries, road accidents, and traffic congestion. |
| 8 | | Smart Parking | Smart parking applications are developed to efficiently track available parking lots and provide drivers with a variety of reservation options. They use an IoT-based system that sends data about available and occupied parking spots through the web or mobile applications. Several studies have used image data to detect free parking spots in large numbers, using a variety of machine learning algorithms. Each parking spot contains several IoT devices, such as smart sensors and microcontrollers, which are used to detect the presence of a car in a parking space and send the data to a centralized system. Drivers receive a real-time report on the availability of all parking spots and choose the best one. |
| 9 | | Route Optimization and Navigation | In cities, traffic congestion is a frequent problem that is only growing worse as the number of vehicles increases. To decrease traffic congestion, a route optimization and navigation program may be used to suggest the optimal path to a certain location. Both vehicle emissions and travel time are reduced as traffic congestion is reduced. This system requires all necessary information to be available, such as the number and location of all necessary stops along the route, as well as delivery time windows. |
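
As a rough illustration of the smart parking entry in Table 4, the sketch below turns per-spot ultrasonic distance readings into the availability report a centralized system could send to drivers. The function names, the 200 cm empty-spot distance, and the 50 cm margin are hypothetical assumptions, and no real sensor or web API is modelled.

```python
from typing import Dict, List


def spot_occupied(distance_cm: float,
                  empty_distance_cm: float = 200.0,
                  margin_cm: float = 50.0) -> bool:
    """A spot counts as occupied when the sensor sees an object well above the floor.

    empty_distance_cm is the (assumed) reading for an empty spot; margin_cm
    absorbs sensor noise.
    """
    return distance_cm < empty_distance_cm - margin_cm


def parking_report(readings: Dict[str, float]) -> Dict[str, object]:
    """Summarise occupancy for all spots from {spot_id: distance_cm} readings."""
    free: List[str] = sorted(s for s, d in readings.items() if not spot_occupied(d))
    occupied: List[str] = sorted(s for s, d in readings.items() if spot_occupied(d))
    return {"free": free, "occupied": occupied, "free_count": len(free)}


if __name__ == "__main__":
    # Hypothetical readings from three spots.
    print(parking_report({"A1": 35.0, "A2": 198.0, "A3": 60.0}))
```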

4. Research Findings and Discussion

4.1. Literature Survey on Various IoT Technologies used in Autonomous Driving

This section of the study contains details on a variety of ongoing IoT research projects in AVs. These projects employ a range of IoT technologies to tackle specific challenges, as seen in Table 5.

Table 5. Survey on different IoT technologies used in AVs

| No. | Paper(s) | System’s Name | Used Technology(s) | Pros | Cons |
|---|---|---|---|---|---|
| 1 | | Accident Detection/Prevention | • GPS, GPRS, GSM, GPS TK103 and 4G • Arduino Mega 2560 • Ultrasonic Sensor • LM35 Temperature Sensor • ADXL335 Accelerometer | • The accident rate can be reduced • Notifies drivers when an obstacle is detected | • Requires 100% reliability and low maintenance |
| 2 | (Ciuntu and Ferdowsi, 2020; Li et al., 2020; Zhang et al., 2020; Cao et al., 2019; Koresh and Deva, 2019) | Real-time Traffic Sign Detection | • Video Camera | • Improves the driver's road safety, especially when he/she is tired or has missed signs • It is crucial when driving somewhere you have never been before | • During busy hours, they cause traffic congestion by stopping vehicles at crossroads • During peak hours, when signals fail, there are significant and widespread traffic problems |
| 3 | (Liu et al., 2020; Savaş and Becerikli, 2020; Minhas et al., 2019; Eddie et al., 2018; Izquierdo et al., 2018; Kumar and Patra, 2018) | Driver Fatigue Detection | • CCD Micro Camera with Infrared Illuminator • CCD Camera • Digital Video Camera • IR Camera | • The detection of inappropriate behaviour is solved in real-time through alerts • The driver’s life can be protected if he or she is alerted while driving in a dangerous situation | • Accidents can be reduced to preserve traffic management • Due to multiple sensors located near the driver’s eyes, the driver’s body is suffering from health problems |
| 4 | (Ahmed and Pothuganti, 2020; Chetouane et al., 2020; Wei et al., 2019; Hsu et al., 2018; Tsai et al., 2018; Xiang et al., 2018) | Vehicle Detection | • Raspberry Pi B+ • PI CAM • Ultrasonic sensor (HC-SR07) • UAV Technology • LCD Display | • Detects and identifies objects that surround the vehicle, giving drivers an excellent sense of their surroundings | • Vehicles come in a variety of shapes and colors. This can lead to inaccurate vehicle estimates and incorrect driver behaviours, putting other drivers and road users at risk |
| 5 | (Kim et al., 2020; Khalifa et al., 2020; Pranav and Manikandan, 2020; Preethaa and Sabari, 2020; Zahid et al., 2019; Qu et al., 2018) | Pedestrian Detection | • Radar • Lidar • Optical Camera • Infrared Radiation | • Aids the driver's ability to drive safely • Keeps pedestrians safe while walking on the road | • Traffic jams are caused by failed pedestrian detection |
| 6 | | Lane Detection and Departure Warning | • Color Detection Sensor • Radar • Optical Camera | • Minimizes the number of auto accidents | • Vehicles cause problems for the vehicles around them |
| 7 | | Road Anomalies Detection | • IoT Sensors • Arduino Uno • MPU6050 3-axis Accelerometer • Optical Camera | • Avoids vehicle accidents • Protects vehicle engineering • Monitors the road while driving | • You navigate the dashboard, receive reports, and receive alerts to make changes |
| 8 | | Smart Parking | • Ultrasonic Sensor • Web Service • FIWARE • Mobile Phones/Apps | • Reduces cruising time • Smart parking as a solution to traffic woes | • It is not recommended for high peak hour volume facilities • Requires maintenance |
| 9 | | Route Optimization and Navigation | • GPS • Smart Phones • Google Map | • Improves customer satisfaction • Reduces operational costs • Saves time in the scheduling of transportation | • Missing traffic data due to detector/communication failure |
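
To make the road anomaly entry in Table 5 more concrete, the following sketch flags bumps and potholes as spikes in vertical acceleration, of the kind a 3-axis accelerometer would report. It is a simple stand-in under stated assumptions: samples are given in units of g, the median serves as the gravity baseline, and the 0.5 g threshold and the name `detect_road_anomalies` are hypothetical rather than taken from the surveyed systems.

```python
from statistics import median
from typing import List, Sequence


def detect_road_anomalies(z_accel_g: Sequence[float],
                          threshold_g: float = 0.5) -> List[int]:
    """Return indices of samples whose vertical acceleration deviates strongly.

    The median of the trace is used as a robust estimate of the resting value
    (about 1 g on a level road), so isolated spikes do not shift the baseline.
    """
    if not z_accel_g:
        return []
    baseline = median(z_accel_g)
    return [i for i, a in enumerate(z_accel_g) if abs(a - baseline) > threshold_g]


if __name__ == "__main__":
    # Hypothetical trace: smooth road, then a bump (1.9 g) followed by a dip (0.3 g).
    trace = [1.0, 1.02, 0.98, 1.9, 0.3, 1.01, 0.99]
    print(detect_road_anomalies(trace))
```

A deployed system would typically pair each flagged index with a GPS position before reporting it, which is outside the scope of this sketch.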

4.2. The Comparison of Several Autonomous Systems’ Results using Various Vision Techniques

One of the most significant determinants of a system’s effectiveness is its use of vision techniques. This review article therefore summarizes the findings of several studies that used vision techniques in their proposed systems. Five of the above-mentioned systems (driver fatigue detection, real-time traffic sign detection, vehicle detection, pedestrian detection, and lane detection and departure warning) have been chosen because they are the most often used in AVs, and the articles reviewed for each have been chosen for their strong outcomes. The following tables display the results of these systems, followed by recommendations on the best techniques or methods to use for each system. The performance of a proposed method can be evaluated with metrics such as the Confusion Matrix, F1-score, PERCLOS (Percentage of Eye Closure), LogLoss (Logarithmic Loss), IoU (Intersection over Union), AUC (Area Under Curve), ROC (Receiver Operating Characteristic), MAE (Mean Absolute Error), MSE (Mean Squared Error), MRR (Mean Reciprocal Rank), DCG (Discounted Cumulative Gain), and NDCG (Normalized Discounted Cumulative Gain). Because each metric has its own mathematical definition and purpose, researchers should carefully pick one or a few of them to assess their systems. A system may also score well on some metrics and poorly on others.
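
The point that a system can look strong on one metric and weaker on another can be seen in a short sketch that derives accuracy, precision, recall, and F1-score from the four cells of a binary confusion matrix, alongside MAE and MSE. The function names and the example counts are illustrative assumptions and do not come from any of the reviewed studies.

```python
from typing import Dict, Sequence


def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Accuracy, precision, recall and F1 from binary confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def mae(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean Absolute Error between two equally long sequences."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean Squared Error between two equally long sequences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


if __name__ == "__main__":
    # Hypothetical detector evaluated on 1000 frames with few positive cases:
    # accuracy is high even though a quarter of the positives are missed.
    for name, value in classification_metrics(tp=90, fp=10, fn=30, tn=870).items():
        print(f"{name}: {value:.3f}")
```

With these made-up counts the accuracy is 0.96 while the recall is only 0.75, which is why a single "overall accuracy" figure should be read with care.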

Table 6-a. Outcomes of driver fatigue detection system

| No. | Paper | Year | Technique(s) | Performance Metric(s) | Algorithm(s) | Overall Accuracy |
|---|---|---|---|---|---|---|
| 1 | | 2018 | • Electroencephalogram (EEG) • Camera • FACET Model | PERCLOS and F1-score | • Gaussian Mixture Model | 89% |
| 2 | | 2018 | • Percentage of Eye Closure (PERCLOS) • Artificial Vision | PERCLOS | • Viola Jones Algorithm • Support Vector Machine (SVM) | 93.3% |
| 3 | | 2019 | • Facial Landmark Marking • Head Bending • Yawn Detection | Confusion Matrix | • Histogram of Oriented Gradient (HOG) • Linear SVM | Bayesian Classifier: 85% |
| | | | | | | FLDA: 92% |
| | | | | | | SVM: 95% |
| 4 | | 2019 | • Steering Pattern Recognition • Vehicle Position in the Lane Monitoring • Driver’s Eye or Face Monitoring • Physiology Measurement | Not Available | Convolutional Neural Network (CNN) | 98.5% |
| 5 | | 2020 | • PERCLOS • Yawning Frequency/Frequency of Mouth (FOM) | PERCLOS | • Multi-Task ConNN | 98.81% |

According to the results, combining the PERCLOS and FOM techniques with the ConNN deep learning algorithm produces the best results among the alternatives compared.
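
Since PERCLOS appears in several rows of Table 6-a, a minimal sketch of how it can be computed may help. It assumes that an upstream eye tracker already supplies a per-frame eye openness value between 0 (closed) and 1 (fully open), and it uses the common convention of counting a frame as closed when the eye is at least 80% closed; the 0.15 drowsiness threshold and the function names are hypothetical.

```python
from typing import Sequence


def perclos(eye_openness: Sequence[float], closed_below: float = 0.2) -> float:
    """Fraction of frames in the window in which the eye counts as closed.

    An openness of 0.2 or less corresponds to an eye that is at least 80% closed.
    """
    if not eye_openness:
        raise ValueError("at least one frame is required")
    closed_frames = sum(1 for openness in eye_openness if openness <= closed_below)
    return closed_frames / len(eye_openness)


def is_drowsy(eye_openness: Sequence[float], perclos_threshold: float = 0.15) -> bool:
    """Flag drowsiness when PERCLOS over the window exceeds an assumed threshold."""
    return perclos(eye_openness) > perclos_threshold


if __name__ == "__main__":
    # Hypothetical 10-frame window with three closed-eye frames.
    window = [0.9, 0.8, 0.1, 0.05, 0.9, 0.85, 0.1, 0.9, 0.95, 0.9]
    print(perclos(window), is_drowsy(window))
```

In practice the window would cover many seconds of video, and the yawning frequency mentioned above would be tracked as a separate signal.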

Table 6-b. Results of real-time traffic sign detection system

| No. | Paper | Year | Technique(s) | Performance Metric(s) | Algorithm(s) | Overall Accuracy |
|---|---|---|---|---|---|---|
| 1 | | 2019 | • Color Space for Traffic Sign Segmentation • Shape Features for Traffic Sign Detection | Confusion Matrix | • LeNet-5 CNN | 99.75% |
| 2 | | 2019 | • Color-based Segmentation • Hough Transform | Not Available | • Speeded up Robust Features (SURF) | 57% |
| | | | | | • Scale-Invariant Feature Transform (SIFT) | 40% |
| | | | | | • Binary Robust Invariant Scalable Keypoints (BRISK) | 80% |
| | | | | | • CNN | 88% |
| | | | | | • Capsule Neural Network (CapsNet) | 98.2% |
| 3 | | 2020 | • Onboard Camera • Optical Character Recognition (OCR) | Confusion Matrix | • Mask R-CNN | 93.1% |
| 4 | | 2020 | • Dynamic Threshold Segmentation (DTS) • Location Detection (LD) • Region of Interest (ROI) | Confusion Matrix and IoU | • SVM based on HOG | 97.41% |
| 5 | | 2020 | • Dot-Product and SoftMax • Feature Pyramids • RoI | Not Available | • Multiscale Cascaded R-CNN | 99.7% |

The findings demonstrate that the LeNet-5 CNN algorithm, which uses color space for traffic sign segmentation and shape features for traffic sign detection, achieves the best results.
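
The color-segmentation stage that precedes classification in several of these systems can be sketched as follows. This is a simplified illustration rather than the pipeline of any reviewed paper: it marks strongly red pixels in HSV space using Python's standard `colorsys` module and returns the bounding box of the marked region as a candidate sign location. The thresholds and the helper names `is_sign_red`, `red_mask`, and `bounding_box` are assumptions.

```python
import colorsys
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0..255


def is_sign_red(r: int, g: int, b: int,
                hue_tolerance: float = 0.05,
                min_saturation: float = 0.5,
                min_value: float = 0.3) -> bool:
    """True when the pixel is a saturated, reasonably bright red."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    near_red = h <= hue_tolerance or h >= 1 - hue_tolerance  # red wraps around hue 0
    return near_red and s >= min_saturation and v >= min_value


def red_mask(image: List[List[Pixel]]) -> List[List[bool]]:
    """Binary mask of red pixels for an image given as rows of RGB tuples."""
    return [[is_sign_red(*pixel) for pixel in row] for row in image]


def bounding_box(mask: List[List[bool]]) -> Optional[Tuple[int, int, int, int]]:
    """(x_min, y_min, x_max, y_max) of all marked pixels, or None if there are none."""
    coords = [(x, y) for y, row in enumerate(mask) for x, hit in enumerate(row) if hit]
    if not coords:
        return None
    xs = [x for x, _ in coords]
    ys = [y for _, y in coords]
    return (min(xs), min(ys), max(xs), max(ys))


if __name__ == "__main__":
    grey, red, blue = (128, 128, 128), (220, 20, 30), (20, 30, 220)
    image = [
        [grey, grey, grey, grey],
        [grey, red, red, grey],
        [grey, red, red, blue],
    ]
    print(bounding_box(red_mask(image)))
```

The resulting region of interest would then be cropped and passed to a classifier such as the CNNs listed in Table 6-b.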

Table 6-c. Outcomes of vehicle detection system

| No. | Paper | Year | Technique(s) | Performance Metric(s) | Algorithm(s) | Overall Accuracy |
|---|---|---|---|---|---|---|
| 1 | | 2018 | • Concatenated ReLU (C. ReLU) • Modified Inception • Hypernet • PVANET (Technique Used) | Not Available | • CNN | Over 90.3% |
| 2 | | 2018 | • Cameras • RoI | Confusion Matrix and IoU | • Fast R-CNN | 88.085% |
| 3 | | 2019 | • RoI • Precise Extraction | Confusion Matrix and ROC Curve | • Combined Haar Classifier with HOG • AdaBoost • SVM Classification | 97.96% |
| 4 | | 2020 | • R-CNN Architectures • Single Shot Detectors (SSD) • You Only Look Once (YOLO) | Not Available | • Fast R-CNN | 99.6% |
| 5 | | 2020 | • Motion-based Approaches • Object-based Approaches | Confusion Matrix | • Gaussian Mixture Model (GMM) | 75% |
| | | | | | • GMM-Kalman Filter | 70% |
| | | | | | • Optical Flow | 73% |
| | | | | | • Aggregate Channel Features (ACF) | 95% |

In comparison to the other algorithms, the results show that employing Fast R-CNN as an algorithm with SSD and YOLO techniques produces the best results in vehicle detection systems.
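
Detectors in the R-CNN, SSD, and YOLO families usually produce several overlapping candidate boxes per vehicle, which are then pruned using the IoU measure listed among the metrics above. The sketch below shows IoU for axis-aligned boxes and a greedy non-maximum suppression step; it is a generic illustration, and the 0.5 threshold, the box format `(x1, y1, x2, y2)`, and the function names are assumptions rather than details from the reviewed papers.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x1 < x2 and y1 < y2


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(detections: List[Tuple[Box, float]],
                        iou_threshold: float = 0.5) -> List[Tuple[Box, float]]:
    """Greedy NMS: keep the highest-scoring box, drop boxes that overlap a kept one."""
    kept: List[Tuple[Box, float]] = []
    for box, score in sorted(detections, key=lambda d: d[1], reverse=True):
        if all(iou(box, kept_box) < iou_threshold for kept_box, _ in kept):
            kept.append((box, score))
    return kept


if __name__ == "__main__":
    # Hypothetical detections: two boxes on the same vehicle, one on another vehicle.
    candidates = [
        ((0, 0, 10, 10), 0.9),
        ((1, 1, 11, 11), 0.8),
        ((20, 20, 30, 30), 0.7),
    ]
    for box, score in non_max_suppression(candidates):
        print(box, score)
```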

The pedestrian detection system can get improved results by combining CNN and MobileNets algorithms with the approaches R-CNN, SSD, and YOLO, as shown in Table 6-d.

Table 6-d. Findings of pedestrian detection system

| No. | Paper | Year | Technique(s) | Performance Metric(s) | Algorithm(s) | Overall Accuracy |
|---|---|---|---|---|---|---|
| 1 | | 2018 | • Color image conversion • Retinex image enhancement | MAE | • YOLOv3 | 94% |
| 2 | | 2019 | • Camera • Region-Based Convolutional Neural Network (RCNN) • SSD • YOLO | Confusion Matrix and IoU | • CNN • MobileNets | 99.99% |
| 3 | | 2020 | • Optical Camera • HOG • Hybrid Metaheuristic Pedestrian Detection (HMPD) | Confusion Matrix and F1-score | • SVM • CNN | 98.5% |
| 4 | | 2020 | • HOG • Feature Vector of Image | Confusion Matrix and F1-score | • SVM-Based Enhanced Feature Extraction (SVMEFE) • HMPD | 98.85% |
| 5 | | 2020 | • Camera (Real-time video captured) | Not Available | • CNN | • Min: 96.73% • Max: 100% |

Table 6-e. Results of lane detection and departure system

| No. | Paper | Year | Technique(s) | Performance Metric(s) | Algorithm(s) | Overall Accuracy |
|---|---|---|---|---|---|---|
| 1 | | 2018 | • Random Sample Consensus (RANSAC) • Kalman Filters • Gaussian Sum Particle Filter (GSPF) • Geometric Overture for Lane Detection by Intersections Entirety (GOLDIE) | Confusion Matrix | • Fully CNN | 97.5% |
| 2 | | 2018 | • Fuzzy Linear Discriminant Analysis (LDA) • Hough Transform • Inverse Perspective Mapping (IPM) | IoU | • Combining IPM and K-means Clustering | Above 90% |
| 3 | | 2019 | • LiDAR • Stereo Camera • Instance Segmentation | mIoU (mean Intersection over Union) | • Cascaded CNN • Mask R-CNN | 95.24% |
| 4 | | 2019 | • HoG • Polynomial Regression • Hybrid Regression and Semantic Segmentation | MAE | • MobilenetV2 | 91.83% |
| 5 | | 2020 | • Optical Cameras • Fully Convolutional Network (FCN) | Not Available | • Dynamic Mode Decomposition (DMD) | 98.03% |

The accuracy results show that using FCN methods with the DMD algorithm performs best among the methods compared.
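
Once lane-marking pixels have been extracted by any of the methods in Table 6-e, the road form is often estimated by fitting a curve through them. The sketch below shows the simplest case, a first-degree least-squares fit; it is not the FCN and DMD approach reported above, and the function names are hypothetical. The line is parameterised as x = slope · y + intercept because lane markings are close to vertical in a forward-facing image.

```python
from typing import List, Tuple


def fit_lane_line(points: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Least-squares fit of x = slope * y + intercept through (x, y) pixel points."""
    n = len(points)
    if n < 2:
        raise ValueError("at least two points are required")
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    var_y = sum((y - mean_y) ** 2 for _, y in points)
    if var_y == 0:
        raise ValueError("points must span more than one image row")
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = cov_xy / var_y
    intercept = mean_x - slope * mean_y
    return slope, intercept


def lane_x_at(line: Tuple[float, float], y: float) -> float:
    """x-position of the fitted lane line at image row y."""
    slope, intercept = line
    return slope * y + intercept


if __name__ == "__main__":
    # Hypothetical pixels on a left lane marking (image origin at the top-left).
    left_marking = [(100, 400), (150, 300), (200, 200)]
    line = fit_lane_line(left_marking)
    print(line, lane_x_at(line, 480))
```

Evaluating the fitted line at the bottom image row gives the lane boundary position that a departure warning step could compare against the vehicle's position.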

5. Conclusions and Recommendations

In conclusion, seven major academic databases (IEEE Xplore, Elsevier, Web of Knowledge, INSPEC, Springer, ACM Digital Library, ScienceDirect) were used to find relevant, recently published literature. More than 200 publications were studied, but only 70 of them were chosen, according to the following criteria: up-to-date information, coverage of the most significant AV systems, application of vision approaches and IoT technologies, and use of smart sensors and actuators.

It remains unclear when autonomous vehicles will become effective in addressing transportation issues. According to optimistic predictions, autonomous cars may become sufficiently reliable, inexpensive, and commonplace by 2030–2035 to supplant most human driving, resulting in significant cost savings and advantages (Litman, 2020). However, there are valid reasons to be cautious. A main motivation for the development of autonomous vehicles was to reduce the number of traffic accidents and to eliminate human factors as a cause of accidents. The idea is to have an autonomous vehicle that is more dependable than a human. To achieve this demanding goal, vehicles must not only copy human behavior but also outperform humans.

The IoV is a subset of the IoT. It has become a critical platform for information exchange between vehicles, humans, and roadside infrastructure. The IoV is soon to become an essential part of our lives, allowing us to construct intelligent transportation systems free of road accidents, traffic lights, and other related issues. It would provide millions of people with more helpful, comfortable, and safe traffic services. With the rise of autonomous vehicles in recent years, a new trend has developed to use a variety of smart approaches and technologies to increase the performance and quality of autonomous decision-making. Combining various computer vision techniques with IoT in AV solutions results in a high-performance embedded system that can be deployed in the environment to provide a more dynamic and resilient control system. Furthermore, computer vision has the potential to obtain higher-level information than other systems that rely on data from radar, lidar, ultrasonic, and other sensors. Such higher-level information is important for designing systems that operate in complicated environments, such as driver monitoring, pedestrian detection, and traffic sign recognition. As discussed above, selecting the appropriate vision approach depends entirely on the type of AV system and the system's parameters. In the pedestrian detection system, for example, it was found that CNN and MobileNets are the best algorithms to use.

Compliance with Ethical Standards

Conflicts of Interest/ Competing Interests: The authors declare that the publishing of this review article does not involve any conflicts of interest.

References

Agarwal, P. K., Kumar, P., and Singh, H., 2020. Causes and Factors in Road Traffic Accidents at a Tertiary Care Center of Western Uttar Pradesh. In Medico Legal Update, 20(1): 38–4.

Ahmad, I., and Pothuganti, K., 2020. Design and Implementation of Real Time Autonomous Car by using Image Processing and IoT. 2020 Third International Conference on Smart Systems and Inventive Technology (ICSSIT), Tirunelveli, India, 107–113.

Aravind, H., Sivraj, P., and Ramachandran, K. I., 2020. Design and Optimization of CNN for Lane Detection. In 2020 11th International Conference on Computing, Communication and Networking Technologies (ICCCNT), IEEE, 1–6.

Banerjee, A., Chakraborty, C., Kumar, A., and Biswas, D., 2020. Emerging Trends in IoT and Big Data Analytics for Biomedical and Health Care Technologies. In Handbook of Data Science Approaches for Biomedical Engineering, 121–152.

Cao, J., Song, C., Peng, S., Xiao, F., and Song, S., 2019. Improved Traffic Sign Detection and Recognition Algorithm for Intelligent Vehicles. In Sensors, 19(18): 4021–4042.

Celesti, A., Galletta, A., Carnevale, L., Fazio, M., Lay-Ekuakille, A., and Villari, M., 2018. An IoT Cloud System for Traffic Monitoring and Vehicular Accidents Prevention Based on Mobile Sensor Data Processing. In IEEE Sensors Journal, 18(12): 4795–4802.

Cheng, X., Zhang, R., and Yang, L., 2019. Wireless Toward the Era of Intelligent Vehicles. In IEEE Internet of Things Journal, 6(1): 188–202.

Chetouane, A., Mabrouk, S., Jemili, I., and Mosbah, M., 2020. Vision-based Vehicle Detection for Road Traffic Congestion Classification. Concurrency and Computation: Practice and Experience, e5983.

Choubey, P. C. S., and Verma, R., 2020. Vehicle Accident Detection, Prevention and Tracking System. International Research Journal of Engineering and Technology (IRJET), 7(8): 2658–2663.

Ciuntu, V., and Ferdowsi, H., 2020. Real-Time Traffic Sign Detection and Classification Using Machine Learning and Optical Character Recognition. In 2020 IEEE International Conference on Electro Information Technology (EIT), 480–486.

Da Xu, L., He, W., and Li, S., 2014. Internet of Things in Industries: A Survey, IEEE Transactions on Industrial Informatics, 10(4): 2233–2243.

Dhawale, R. Y., and Gavankar, N. L., 2019. Lane Detection and Lane Departure Warning System using Color Detection Sensor. 2019 2nd International Conference on Intelligent Computing, Instrumentation and Control Technologies (ICICICT), Kannur, Kerala, India.

Eddie, E. E., Fabricio, D. E., Franklin, M. S., Paola, M. V., and Eddie D. G., 2018. Real-time Driver Drowsiness Detection based on Driver’s Face Image behavior using a System of Human Computer Interaction Implemented in a Smartphone. In International Conference on Information Technology & Systems. Springer, Cham.

Elbasani, E., Siriporn, P., and Choi, J. S., 2020. A Survey on RFID in Industry 4.0. In Internet of Things for Industry 4.0, Springer, Cham, 1–16.

Elbery, A., Hassanein, H. S., Zorba, N., and Rakha, H. A., 2020. IoT-Based Crowd Management Framework for Departure Control and Navigation. In IEEE Transactions on Vehicular Technology.

Fernando, W. U. K., Samarakkody, R. M., and Halgamuge, M. N., 2020. Smart Transportation Tracking Systems based on the Internet of Things Vision. In Connected Vehicles in the Internet of Things, Springer, Cham, 143–166.

Garg, T., Kagalwalla, N., Churi, P., Pawar, A., and Deshmukh, S., 2020. A Survey on Security and Privacy Issues in IoV. International Journal of Electrical & Computer Engineering, 10(5): 5409–5419.

Hamid, K., Bahman, J., Mahdi, J. M., and Reza, N. J., 2020. Artificial Intelligence and Internet of Things for Autonomous Vehicles. In Nonlinear Approaches in Engineering Applications, Springer, Cham, 39–68.

Hanan, E., 2019. Internet of Things (IoT), Mobile Cloud, Cloudlet, Mobile IoT, IoT Cloud, Fog, Mobile Edge, and Edge Emerging Computing Paradigms: Disambiguation and Research Directions. Journal of Network and Computer Applications, 128: 105–140.

Hirz, M., and Walzel, B., 2018. Sensor and Object Recognition Technologies for Self-Driving Cars. In Computer-Aided Design and Applications, 15(4): 501–508.

Hsu, S., Huang, C. L., and Cheng, C. H., 2018. Vehicle Detection using Simplified Fast R-CNN. In 2018 International Workshop on Advanced Image Technology (IWAIT), IEEE, 1–3.

Izquierdo-Reyes, J., Ramirez-Mendoza, R. A., Bustamante-Bello, M. R., Navarro-Tuch, S., and Avila-Vazquez, R., 2018. Advanced Driver Monitoring for Assistance System (ADMAS). International Journal on Interactive Design and Manufacturing (IJIDeM), 12(1): 187–197.

Kang, J., Yu, R., Huang, X., and Zhang, Y., 2018. Privacy-Preserved Pseudonym Scheme for Fog Computing Supported Internet of Vehicles. In IEEE Transactions on Intelligent Transportation Systems, 19(8): 2627–2637.

Khalifa, A. B., Alouani, I., Mahjoub, M. A., and Amara, N. E. B., 2020. Pedestrian Detection Using a Moving Camera: A Novel Framework for Foreground Detection. In Cognitive Systems Research, 60: 77–96.

Kim, B., Yuvaraj, N., Sri, P., Santhosh, K. R. R., and Sabari, A., 2020. Enhanced Pedestrian Detection using Optimized Deep Convolution Neural Network for Smart Building Surveillance. Soft Computing, 24: 17081–17092.

Kocić, J., Jovičić, N., and Drndarević, V., 2018. Sensors and Sensor Fusion in Autonomous Vehicles. 2018 26th Telecommunications Forum (TELFOR), Belgrade, 420–425.

Koresh, M. H. J. D., and Deva, J., 2019. Computer Vision Based Traffic Sign Sensing for Smart Transport. Journal of Innovative Image Processing (JIIP), 1(1): 11–19.

Kumar, A., and Patra, R., 2018. Driver drowsiness monitoring system using visual behaviour and machine learning. 2018 IEEE Symposium on Computer Applications & Industrial Electronics (ISCAIE), Penang, 339–344.

Lex, F., Daniel, E. B., Michael, G., William, A., Spencer, D., Bendikt, J., Jack, T., Aleksandr, P., Julia, K., Li, D., Sean, S., Alea, M., Andrew, S., Anthony, P., Bobbie, D. S., Linda, A., Bruce, M., and Bryan, R., 2019. MIT Advanced Vehicle Technology Study: Large-Scale Naturalistic Driving Study of Driver Behavior and Interaction with Automation. In IEEE Access, 7: 102021–102038.

Li, G., Wang, Z., Fei, X., Li, J., Zheng, Y., Li, B., and Zhang, T., 2021. Identification and Elimination of Cancer Cells by Folate-Conjugated CdTe/CdS Quantum Dots Chiral Nano-Sensors. Biochemical and Biophysical Research Communications, 560: 199–204.

Li, W. L., Li, X. G., Qin, Y. Y., Ma, D., Cui, W., and Province, J., 2020. Real-time Traffic Sign Detection Algorithm Based on Dynamic Threshold Segmentation and SVM. Journal of Computers, 31(6): 258–273.

Litman, T., 2020. Autonomous Vehicle Implementation Predictions: Implications for Transport Planning.

Liu, J., Lou, L., Huang, D., Zheng, Y., and Xia, W., 2018. Lane Detection based on Straight Line Model and K-means Clustering. In 2018 IEEE 7th Data Driven Control and Learning Systems Conference (DDCLS), IEEE, 527–532.

Liu, Z., Peng, Y., and Hu, W., 2020. Driver Fatigue Detection based on Deeply-Learned Facial Expression Representation. In Journal of Visual Communication and Image Representation, 71: 102723.

Lu, H., Liu, Q., Tian, D., Li, Y., Kim, H., and Serikawa, S., 2019. The Cognitive Internet of Vehicles for Autonomous Driving. In IEEE Network, 33(3): 65–73.

Minhas, A. A., Jabbar, S., Farhan, M., and ul Islam, M. N., 2019. Smart Methodology for Safe Life on Roads with Active Drivers based on Real-Time Risk and Behavioural Monitoring. Journal of Ambient Intelligence and Humanized Computing, Springer, 1–13.

Minovski, D., Åhlund, C., and Mitra, K., 2020. Modeling Quality of IoT Experience in Autonomous Vehicles. IEEE Internet of Things Journal, 7(5): 3833–3849.

Narote, S. P., Bhujbal, P. N., Narote, A. S., and Dhane, D. M., 2018. A Review of Recent Advances in Lane Detection and Departure Warning System. In Pattern Recognition, 73: 216–234.

Ng, J. R., Wong, J. S., Goh, V. T., Yap, W. J., Yap, T. T. V., and Ng, H., 2019. Identification of Road Surface Conditions using IoT Sensors and Machine Learning. Computational Science and Technology, Springer, Singapore, 481.

Olanrewaju, R. F., Fakhri, A. S. A., Sanni, M. L., and Ajala, M. T., 2019. Robust, Fast and Accurate Lane Departure Warning System using Deep Learning and Mobilenets. In 2019 7th International Conference on Mechatronics Engineering (ICOM), IEEE, 1–6.

Qu, H., Yuan, T., Sheng, Z., and Zhang, Y., 2018. A Pedestrian Detection Method based on YOLOv3 Model and Image Enhanced by Retinex. 2018 11th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), IEEE.

Philip, J. M., Durga, S., and Esther, D., 2021. Deep Learning Application in IoT Health Care: A survey. In Intelligence in Big Data Technologies-Beyond the Hype, Springer, Singapore, 199–208.

Pizzati, F., Allodi, M., Barrera, A., and García, F., 2019. Lane Detection and Classification using Cascaded CNNs. In International Conference on Computer Aided Systems Theory, Springer, Cham, 95–103.

Pranav, K. B., and Manikandan, J., 2020. Design and Evaluation of a Real-time Pedestrian Detection System for Autonomous Vehicles. In 2020 Zooming Innovation in Consumer Technologies Conference (ZINC), IEEE, 155–159.

Preethaa, K. S., and Sabari, A., 2020. Intelligent Video Analysis for Enhanced Pedestrian Detection by Hybrid Metaheuristic Approach. Soft Computing, 1–9.

Rahul, P. K., Abhishek, S. K., Zeeshaan, W. S., Vaibhav, U. B., and Nasiruddin, M., 2020. IoT based Self Driving Car. International Research Journal of Engineering and Technology (IRJET), 7(3): 5177–5181.

Rani, R., Kumar, N., Khurana, M., Kumar, A., and Barnawi, A., 2021. Storage as a Service in Fog Computing: A Systematic Review. Journal of Systems Architecture, 102033.

Ravikumar, S., and Kavitha, D., 2021. IOT based Autonomous Car Driver Scheme based on ANFIS and Black Widow Optimization. Journal of Ambient Intelligence and Humanized Computing, 1–14.

Sadiku, M. N., Tembely, M., and Musa, S. M., 2018. Internet of Vehicles: An Introduction. International Journal of Advanced Research in Computer Science and Software Engineering, 8(1): 11.

Sakhare, K. V., Tewari, T., and Vyas, V., 2020. Review of Vehicle Detection Systems in Advanced Driver Assistant Systems. Archives of Computational Methods in Engineering, 591–610.

Sastry, A., Bhargav, K., Pavan, K. S., and Narendra, M., 2020. Smart Street Light System using IoT. International Research Journal of Engineering and Technology (IRJET), 7(3): 3093–3097.

Savaş, B. K., and Becerikli, Y., 2020. Real Time Driver Fatigue Detection System based on Multi-Task ConNN. In IEEE Access, 8: 12491–12498.

Sharma, S., 2021. Towards Artificial Intelligence Assisted Software Defined Networking for Internet of Vehicles. In Intelligent Technologies for Internet of Vehicles. Springer, Cham, 191–222.

Shashank, P., Pavan, M., Niharika, P., Jalakam, S., and Sathweek, B., 2020. Machine Learning Based Water Quality Checker and pH Verifying Model. European Journal of Molecular & Clinical Medicine, 7(11): 2220–2228.

Singh, D., Tripathi, G., and Jara, A. J., 2014. A Survey of Internet-of-Things: Future Vision, Architecture, Challenges and Services. 2014 IEEE World Forum on Internet of Things (WF-IoT), IEEE.

Srivastava, M., and Kumar, R., 2021. Smart Environmental Monitoring Based on IoT: Architecture, Issues, and Challenges. In Advances in Computational Intelligence and Communication Technology, Springer, 349–358.

Sultana, T., and Wahid, K. A., 2019. IoT-Guard: Event-Driven Fog-Based Video Surveillance System for Real-Time Security Management. In IEEE Access, 7: 134881–134894.

Tsai, C., Ching-Kan, T., Ho-Chia, T., and Jiun-In, G., 2018. Vehicle Detection and Classification based on Deep Neural Network for Intelligent Transportation Applications. In 2018 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), IEEE, 1605–1608.

Uma, S., and Eswari, R., 2021. Accident Prevention and Safety Assistance using IOT and Machine Learning. Journal of Reliable Intelligent Environments, 1–25.

Varma, B., Sam, S., and Shine, L., 2019. Vision Based Advanced Driver Assistance System Using Deep Learning. 2019 10th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Kanpur, India, 1–5.

Wang, J., Lim, M. K., Wang, C., and Tseng, M. L., 2021. The evolution of the Internet of Things (IoT) over the past 20 years. Computers & Industrial Engineering, 155: 107174.

Wei, Y., Tian, Q., Guo, J., Huang, W., and Cao, J., 2019. Multi-Vehicle Detection Algorithm through Combining Harr and HOG Features. Mathematics and Computers in Simulation, 155: 130–145.

Whitmore, A., Agarwal, A., and Da Xu, L., 2015. The Internet of Things-A Survey of Topics and Trends. Information Systems Frontiers, 17(2): 261–274.

World Health Organization (WHO), 2020. Global Status Report on Road Safety. Accessed on: 28th October 2021. [Online]. Available: https://www.who.int/violence_injury_prevention/road_safety_status/report/en/.

Xiang, X., Zhai, M., Lv, N., and El Saddik, A., 2018. Vehicle Counting based on Vehicle Detection and Tracking from Aerial Videos. In Sensors, 18(8): 2560.

Zahid, A., Iniyavan, R., and Madhan, M. P., 2019. Enhanced vulnerable pedestrian detection using deep learning. International Conference on Communication and Signal Processing (ICCSP), IEEE, 0971–0974.

Zaidan, A. A., and Zaidan, B. B., 2020. A Review on Intelligent Process for Smart Home Applications based on IoT: Coherent Taxonomy, Motivation, Open Challenges, and Recommendations. Artificial Intelligence Review, 53(1): 141–165.

Zang, J., Zhou, W., Zhang, G., and Duan, Z., 2018. Traffic Lane Detection using Fully Convolutional Neural Network. In 2018 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), IEEE, 305–311.

Zhang, J., Xie, Z., Sun, J., Zou, X., and Wang, J., 2020. A Cascaded R-CNN with Multiscale Attention and Imbalanced Samples for Traffic Sign Detection. In IEEE Access, 8: 29742–29754.

Zhao, C., Li, L., Xin, P., Zhiheng, L., Fei-Yue, W., and Xiangbin, W., 2021. A Comparative Study of State-of-the-art Driving Strategies for Autonomous Vehicles. Accident Analysis & Prevention, 150: 105937.

Authors’ Biographies

Mohammed Qader Kheder was born in Ranyah, Sulaimani, Kurdistan Region, Iraq. He earned a BSc degree at the University of Sulaimani (2008) and an MSc degree in advanced computer science at the University of Huddersfield, United Kingdom (2011). On the strength of his research, he was promoted from Assistant Lecturer to Lecturer at the University of Sulaimani. He is a Ph.D. student whose study focuses on utilizing IoT technology and deep learning techniques in autonomous vehicles. His research interests include IoT, machine learning, wireless access networks, and vision tactics.

Prof. Aree Ali Mohammed was born in Sulaimani, Kurdistan Region, Iraq. He obtained a BSc degree at the University of Mosul (1995), an MSc degree in Computer Science in France (2003), and a Ph.D. in multimedia systems at the University of Sulaimani (2008). He directed the Information Technology Directorate for four years (2010–2014) and was head of the Computer Science Department, College of Science, University of Sulaimani, for seven years. His main field of interest is multimedia system applications for processing, compression, and security. He has published many papers in scientific journals throughout the world.